Testing Rules

Test your rules before they hit production. Rulebricks gives you two modes: Try for quick single-request testing, and Suite for regression testing across multiple scenarios.

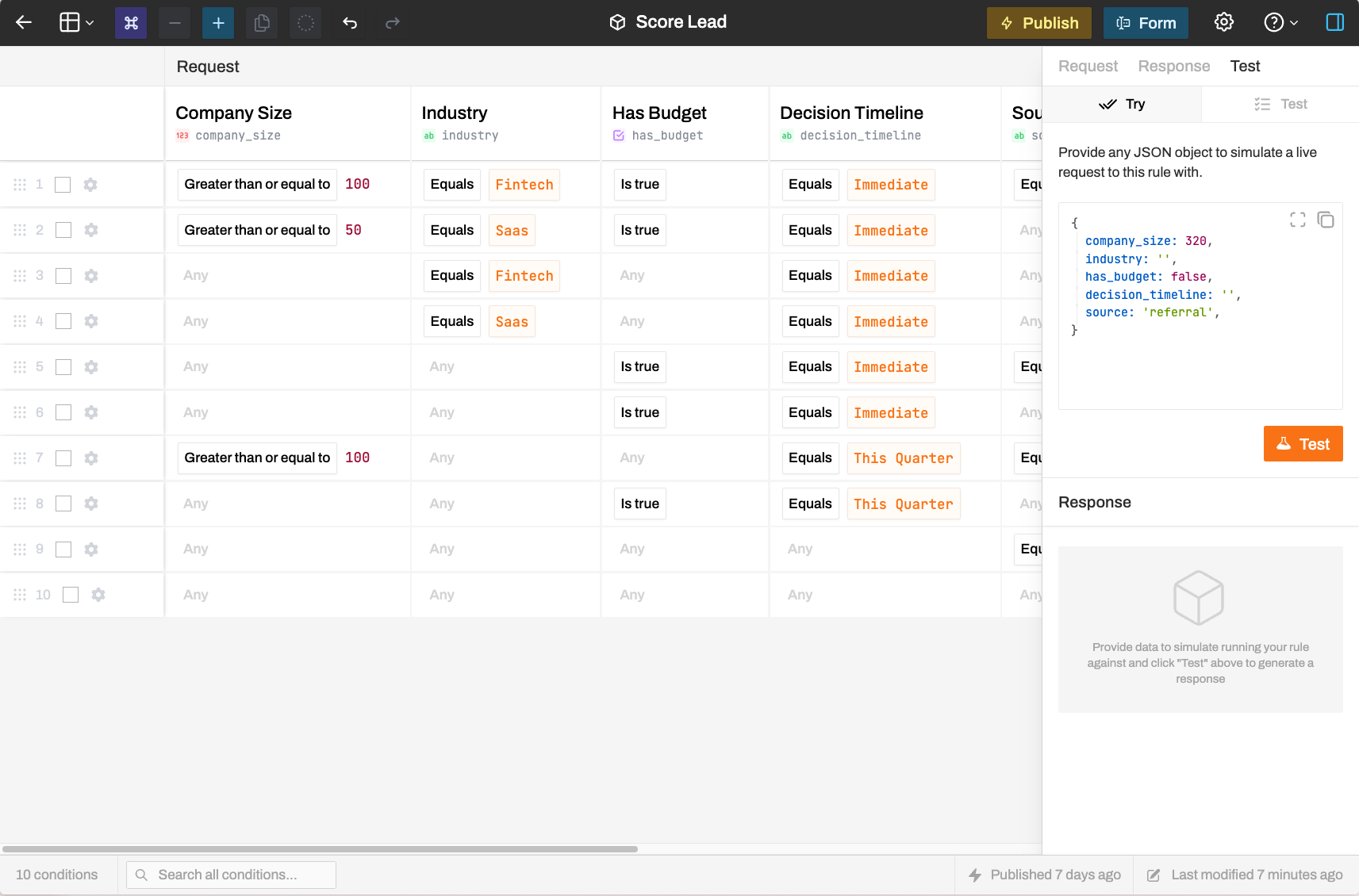

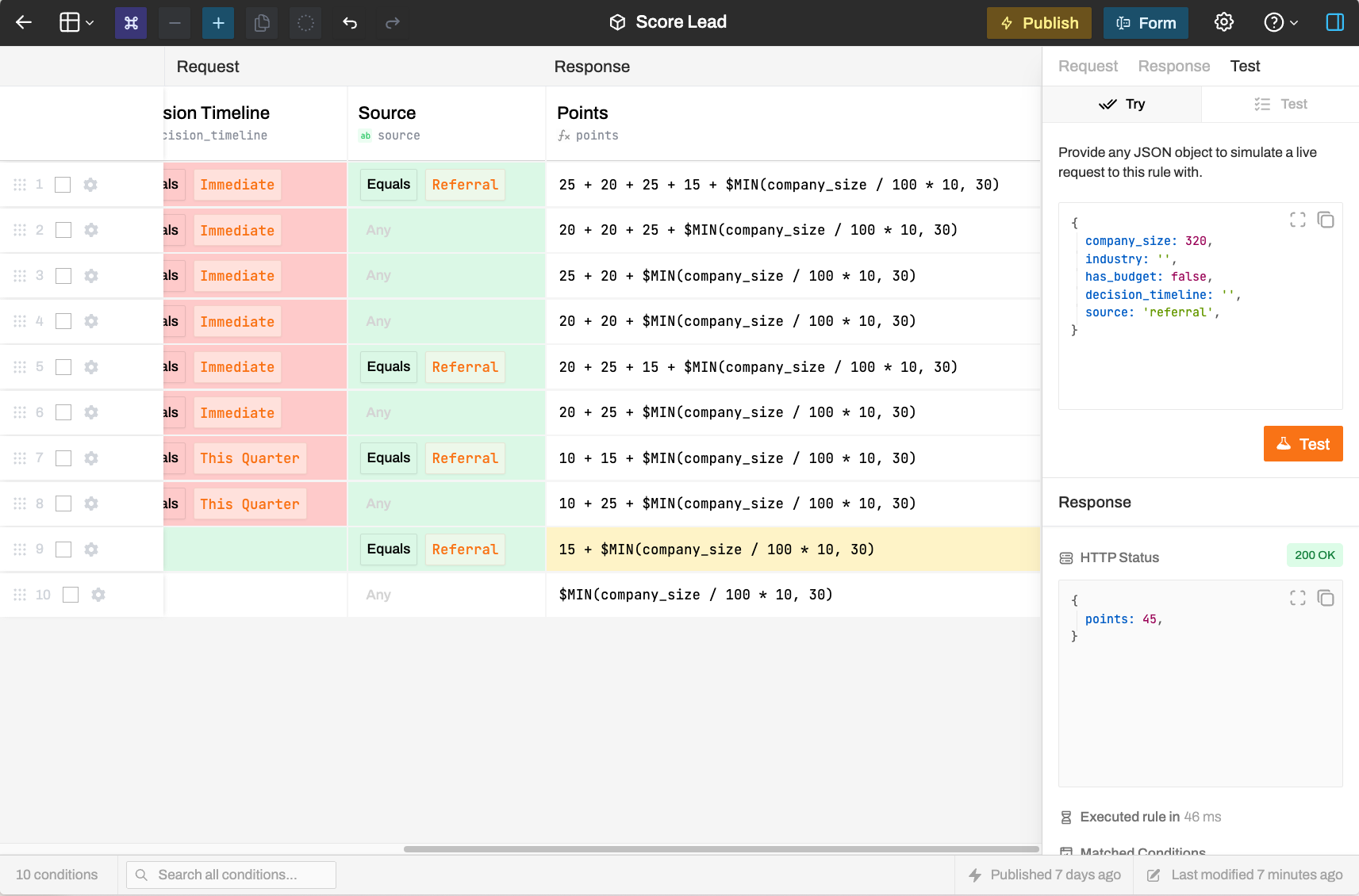

Try Mode

Open the Test tab in the rule editor and select Try.

Paste a request object and click the orange button. The decision table lights up:

- Green cells — Conditions that matched

- Red cells — Conditions that didn't match

- Blue/Yellow cells — The results returned

This visual feedback shows exactly which row matched and why.

If you get an error, your rule doesn't handle that input. Check for missing conditions or add a catch-all row at the bottom.
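To make the matching behavior concrete, here is a minimal sketch of a decision table, not Rulebricks' actual implementation. It assumes rows are checked top to bottom and the first row whose conditions all match wins. The `ROWS` table, its fields (`age`, `member`, `discount`), and the `evaluate` function are hypothetical names for illustration only.

```python
# Hypothetical decision table: each row is (conditions, result).
# A condition of None means "any value" for that field.
ROWS = [
    ({"age": lambda v: v >= 65, "member": lambda v: v is True}, {"discount": 0.20}),
    ({"age": lambda v: v >= 65, "member": None}, {"discount": 0.10}),
    ({"age": None, "member": None}, {"discount": 0.0}),  # catch-all row
]


def evaluate(request, rows):
    """Return (row index, per-cell match map, result) for the first matching row.

    Assumes first-match-wins evaluation, top to bottom.
    """
    for index, (conditions, result) in enumerate(rows):
        # One boolean per cell: True is a matched condition, False is not.
        cells = {
            field: check is None or (field in request and check(request[field]))
            for field, check in conditions.items()
        }
        if all(cells.values()):
            return index, cells, result
    # No row matched: this is the "error" case a catch-all row prevents.
    raise LookupError("no row matched this request")
```

With the catch-all row in place, `evaluate({"age": 30, "member": True}, ROWS)` falls through to the last row. Calling it with `ROWS[:2]` (no catch-all) raises `LookupError` for the same request, which is the situation described above.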

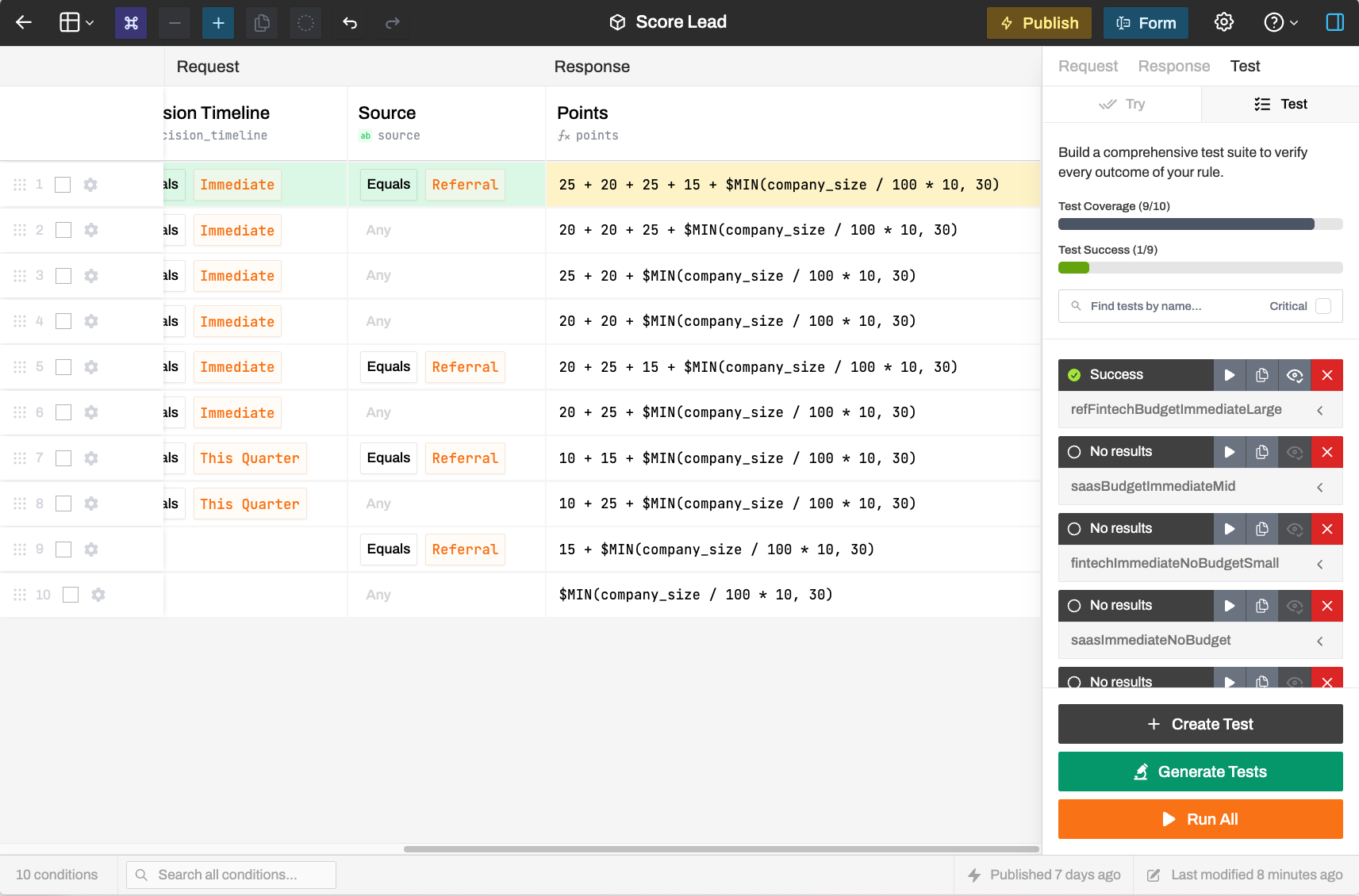

Suite Mode

Switch to Test Suite mode to run multiple test cases at once.

Build your suite by saving tests from Try mode. When a test produces the expected result, save it. Mark tests as Critical if they should block publishing when they fail.
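Conceptually, a saved test is a request paired with the result you expect, plus a flag for whether it is Critical. The sketch below shows that idea in plain Python. The `shipping_rule` function, the `SUITE` structure, and the field names are all hypothetical stand-ins, not the Rulebricks data format.

```python
def shipping_rule(request):
    """Hypothetical stand-in for a rule: free shipping on orders of 50 or more."""
    return {"shipping": "free" if request["order_total"] >= 50 else "standard"}


# Each saved case pairs a request with its expected result (assumed shape).
SUITE = [
    {
        "name": "large order ships free",
        "request": {"order_total": 80},
        "expected": {"shipping": "free"},
        "critical": True,
    },
    {
        "name": "small order pays shipping",
        "request": {"order_total": 20},
        "expected": {"shipping": "standard"},
        "critical": False,
    },
]


def run_suite(rule, suite):
    """Run every case and record whether the actual result equals the expected one."""
    report = []
    for case in suite:
        try:
            actual = rule(case["request"])
        except Exception as exc:  # a rule error counts as a failed test
            actual = {"error": str(exc)}
        report.append(
            {
                "name": case["name"],
                "passed": actual == case["expected"],
                "critical": case["critical"],
                "actual": actual,
            }
        )
    return report
```

Running `run_suite(shipping_rule, SUITE)` returns one report entry per saved case, so a later change to the rule that alters either result shows up as a failed entry.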

Rapid Test Generation

We find writing tests boring, and we thought you might too. An AI feature lets you create many tests quickly from one or two examples: build and name one test case in the Suite, then click "Generate Tests" to get coverage across your entire rule.

For large test suites, we offer a simple API to bulk upload test cases programmatically.

Continuous Testing

Enable Continuous Testing in rule settings to run your test suite automatically before every publish.

When enabled, you'll see a test score next to every published version: the percentage of tests that passed. Critical tests block publishing if they fail; non-critical tests report their results but don't block it.
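The scoring and gating logic described here can be sketched in a few lines. This is an illustration of the stated behavior only, assuming the score is a plain pass percentage over all tests. `CaseResult`, `suite_score`, and `can_publish` are hypothetical names, not part of Rulebricks.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    """Outcome of one test case (hypothetical shape)."""
    name: str
    passed: bool
    critical: bool = False


def suite_score(results):
    """Percentage of tests that passed; 0.0 for an empty suite."""
    if not results:
        return 0.0
    return 100.0 * sum(r.passed for r in results) / len(results)


def can_publish(results):
    """Publishing is blocked only when a Critical test fails."""
    return not any(r.critical and not r.passed for r in results)


results = [
    CaseResult("senior member discount", passed=True, critical=True),
    CaseResult("edge case: zero total", passed=False),  # non-critical failure
    CaseResult("standard shipping", passed=True),
    CaseResult("free shipping", passed=True),
]
print(suite_score(results))  # 75.0
print(can_publish(results))  # True: the only failure is non-critical
```

In this example the failing non-critical test lowers the score but does not stop the publish; marking that same test Critical would flip `can_publish` to `False`.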